One of the key interests of a theology of technology often comes down to what is good technology and what is bad technology. Others have had a crack at this, so I might as well too. The philosophy of technology has all sorts of interesting ideas, but in this blog I don't want to go there yet. I first want to look at the practical level and begin building a model from which I will, at some point, discuss philosophers like Borgmann and Ellul.

If you are at all sanguine about technology, this book makes for an interesting read. Ruth Conway presents neither a systematic review of the choices nor a balanced account of the good and bad of technology. Because technology is often contradictory in its effects, it is hard to pin down, and it is possible to highlight only the good or only the bad.

Take the example of Botox, known to many as a useless technology that wastes money, with the rich using it to look good (silly, IMHO). However, Botox also has serious medical uses, and the cost of those uses is reduced by its being so widely available for cosmetic purposes.

We are often aware of negative feedback loops in technology, but here is an example of a positive one, paid for by people with too much money.

Now it is possible to simply react to this complexity and personalise technology, as Wan does here. I know Wan and appreciate his insights, but for me this doesn't seem completely appropriate. Just as purely privatising your Christian faith doesn't work, privatising your choices about technology ignores big factors, like pollution or labour conditions, that lie outside your individual choices. Nevertheless, Wan's response is better than this one, which ignores too many factors.

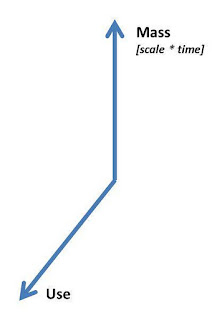

But it is clear that we need a use dimension.

The problem is that with some technologies, even if we personally choose to use them for the right reasons, there are what economists call externalities, and these can grow faster than use itself because the environment isn't infinite. For example, I might think I need a car to get to work, but if 3 billion people made similar choices we would be in a real mess. Rather than simply calling these externalities, a label with both good and bad rationales attached to it, we need to develop a better awareness of feedback loops and unintended consequences.

If we use antibiotics unwisely, we will end up with untreatable diseases again.

If we build roads, we promote car use.

etc etc etc...

So we need a scale index on our map.

But as Edward Tenner's work on unintended consequences suggests, we can learn. As humans we do learn at least a little from our mistakes, and then in our hubris we make new ones. Unfortunately, we don't widely teach a theory of knowledge built around four categories: what we know, what we think we know, what we know we don't know, and the unknown unknowns.

Science as a field of endeavour loves to work on the middle two: proving or disproving existing knowledge and discovering new 'truths'. However, we typically get blindsided by the unexpected (often unlinked interaction effects). These interaction effects frequently involve human behaviour, and this is where the big mistake in current attitudes to research comes in. Countries spend vast sums of money looking for the next drug to 'cure' some disease or condition, and minuscule amounts on the social and behavioural issues that go alongside medical practice. Social science is an afterthought, yet that is where the big unknowns lie.

Nevertheless, we can add in a learning dimension to our framework.

To illustrate the point about learning, let's use a really bad technology. Thalidomide was given to patients in the 1950s as a sedative but caused severe birth defects when taken by pregnant women. As a result it was banned. So who could imagine that such a dangerous drug might have positives? As scientists continued to research it, they discovered a number of important benefits; it is now used, for example, to treat a complication of leprosy and the blood cancer multiple myeloma.

That is the problem of technology: what is bad in one context can be good in another, and what is useful to some becomes a harm if too many engage in the same practice.

Technologies can occupy multiple places in this 3D box simultaneously; we need to think of choices as something like a Rubik's cube.

The technological world is not just a series of unconnected artifacts without values. Philosophers of technology and scholars of science, technology and society have long been interested in the connections between people and the artifacts they create and use. This blog is focussed on how Christians engage and disengage with the technologies around them. My goal is not to focus too much on one class of technologies (say, electronics) but instead to focus on the depth of our technological systems.